ICDAR 2023 Competition on Harvesting Answers and Raw Tables

from Infographics (CHART-Infographics)

Summary

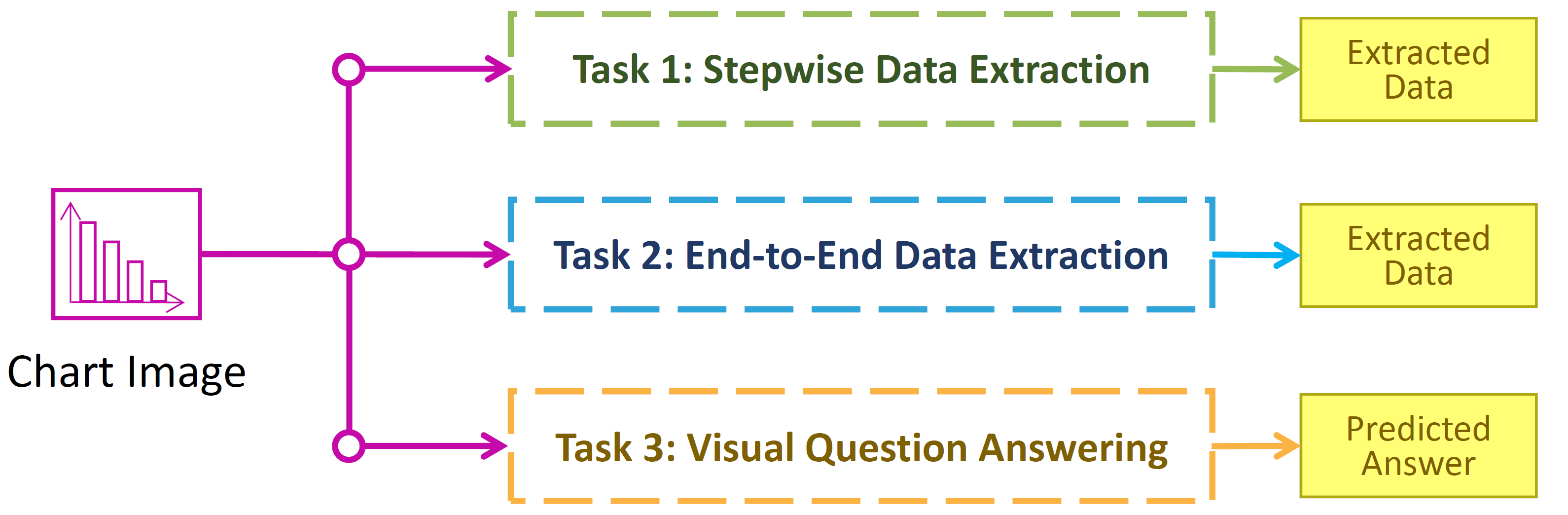

This competition is composed of a series of task and sub-tasks related to chart analysis. The first task, Stepwise Data Extraction, is composed of a series of 6 sub-tasks for chart data extraction, which when put together as a pipeline go from an input chart image to a CSV file representing the data used to create the chart. The second task, End-to-end Data Extraction, evaluates methods that perform the whole chart data extraction pipeline from the chart image without receiving intermediate inputs. The third task, Visual Question Answering, evaluates methods that can take chart images and question related to the chart in the image and can produce the right answers to the given question. Entrants in the competition may choose to participate in any number of sub-tasks, which are evaluated in isolation, such that solving previous sub-tasks is not necessary. We hope that such decomposition of the problem of chart image analysis will draw broad participation from the Document Analysis and Recognition (DAR) community.

Competition Updates

| Apr 15, 2023 | Leaderboards are out. |

| Apr 13, 2023 | Competition is cancelled. |

| Apr 03, 2023 | Testing dataset for Tasks 3 is released. |

| Mar 29, 2023 | Testing dataset for Tasks 1 and 2 is released. |

| Mar 29, 2023 | Competition Schedule Updated |

| Mar 18, 2023 | Testing dataset released date changed |

| Feb 11, 2023 | Training dataset is released. |

| Dec 30, 2022 | Registration is now open! |

| Dec 29, 2022 | Previous CHART-Info links: ICPR 2022 ICPR 2020 ICDAR 2019 |

Background

Charts are a compact method of displaying and comparing data. In scientific publications, charts and graphs are often used to to summarize results, comparison of methodologies, emphasize the reasoning behind key aspects of the scientific process, and justification of design choices, to name a few. Automatically extracting data from charts is a key step in understanding the intent behind a chart which could lead to a better understanding of the document itself.

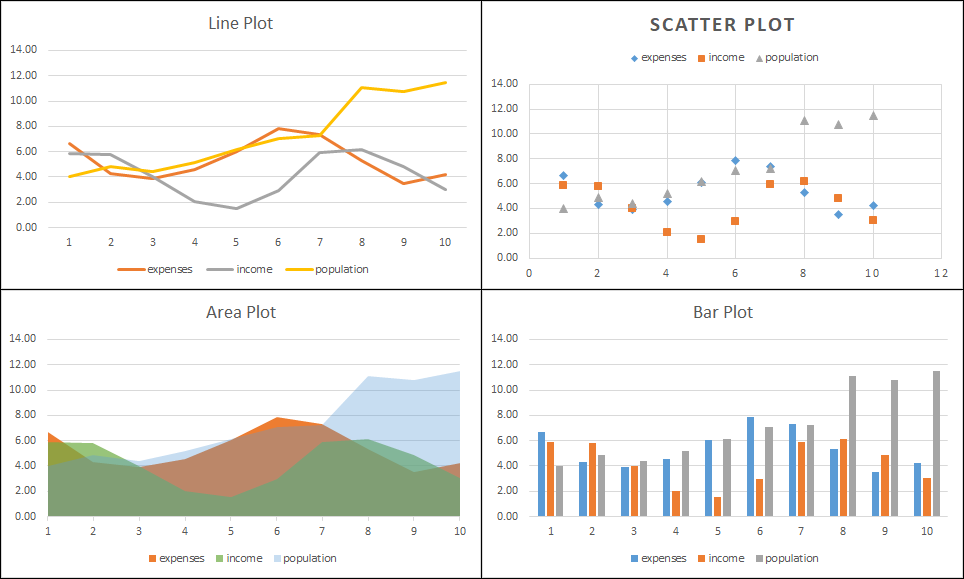

Example chart types to use in the proposed competition. Note that all these were generated from the same tabular data.

The DAR community has displayed a continued interest in classifying types of charts as well as processing charts to extract data. In the past decade, multiple applications have been built around automatic processing of charts such as retrieval, textual summarization of charts, making charts more accessible on the web, automatically redesigning charts, automatically assessing chart quality, preservation of charts from historical documents, chart data plagiarism detection, bibliometrics, visual question answering and accelerating discovery of new materials.

Competition Outline

Dataset

Our previous competitions used both real and synthetic charts datasets for all tasks. For ICDAR 2023, we are providing a extended UB-UNITEC PMC dataset, which contains real charts extracted from Open-Access publications found in the PubMedCentral. (PMC). Following the same protocol, we only picked images released under a Creative Commons By Attribution license (CC-BY), which allows us to redistribute them to the competition participants.

For this competition, UB-UNITEC PMC has a new, larger training set (by merging training and testing sets from previous competition), and a novel testing set that is also collected and annotated on real charts. Participants can use additional datasets (e.g. Adobe Synth from previous competition) to improve the performance of their framework, as long as it is notified in their final result.

Evaluation

On March, 2023, we will release the test datasets, and by March 15th, 2023 competition participants are expected submit the following:

- Predictions on each test dataset

- A short but complete system description

The organizers will tabulate the results for each task and present it at ICDAR 2023 in San Jose, California, USA . Note that you do not need to attend ICDAR 2023 to participate in this competition.

The tasks and sub-tasks considered in this competition are

-

Task 1: Stepwise Data Extraction

- Sub-task 1.1: Chart Image Classification (e.g. bar, box, line)

- Sub-task 1.2: Text Detection and Recognition

- Sub-task 1.3: Text Role Classification (e.g. title, x-axis label)

- Sub-task 1.4: Axis Analysis

- Sub-task 1.5: Legend Analysis

-

Sub-task 1.6: Data Extraction

- Sub-task 1.6.a: Plot Element Detection/Classification

- Sub-task 1.6.b: Raw Data Extraction

- Task 2: End-to-end Data Extraction

- Task 3: Visual Question Answering

Each subtask is evaluated in isolation, meaning that systems have access to the ideal output (i.e. Ground Truth) of previous subtasks. Subtasks 1.3-1.5 are considered parallel tasks and only receive the outputs of subtasks 1.1 and 1.2. For example, the input for the Axis Analysis sub-task is the chart image, chart type, text bounding boxes, and text transcriptions. Each sub-task has its own evaluaton metric, detailed on the Tasks page.

Participants are not obligated to perform all subtasks and may submit test results for any set of subtasks they wish. Methods may be submitted for the end-to-end data extraction task where systems are only given the chart image (no intermediate inputs) and are expected to produce the raw data used to create the chart (same output as subtask 1.6.b). Additionally, methods may be submitted for the visual question answering task where system also only given the chart image and a question about the chart, and they are expected to produce the right answer based on the chart.

Registration

Register here.

Acknowledgements

The creation of our manually curated real CHART dataset was partially supported by the National Science Foundation under Grant No.1640867 (OAC/DMR).